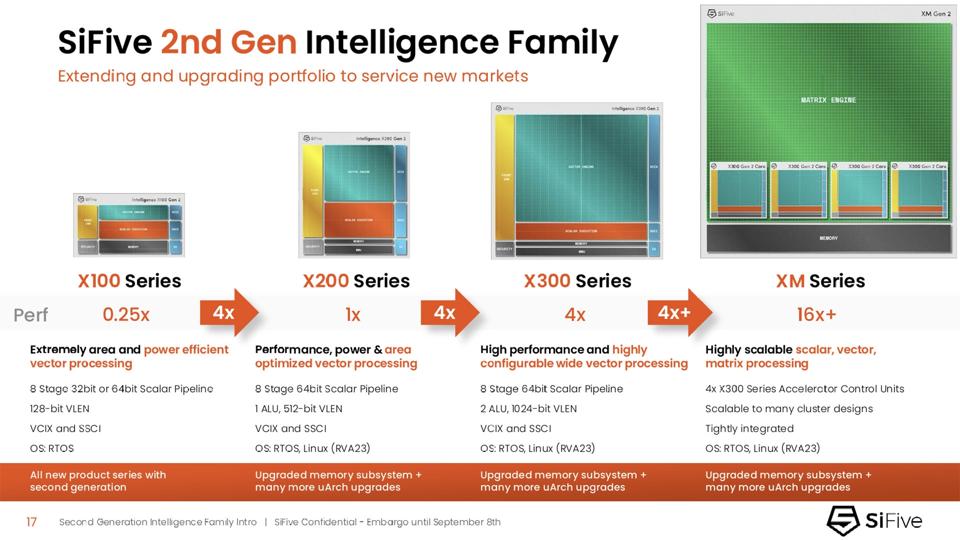

SiFive just announced an array of new products that run the gamut from tiny, ultra-low-power designs for far-edge IoT devices to more powerful engines for AI data centers, and everything in between. SiFive’s 2nd Generation Intelligence Family of RISC-V processor IP includes five new products: the X160 Gen 2 and X180 Gen 2, both of which are brand-new designs, plus upgraded versions of the X280, X390, and XM cores.

The SiFive 2nd Generation Intelligence Family broadens the company’s reach into the far edge and embedded AI spaces, while also improving performance and capabilities to target more demanding inference and training applications in the cloud. The designs are tailored for AI algorithms and offer an improved memory subsystem, featuring configurable caches and new latency tolerance mechanisms that help mask memory latency and maximize throughput under demanding workloads. The family also adds native support for data types such as BF16 (widely used in AI training), along with vector crypto extensions, further expanding workload coverage.
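For readers unfamiliar with BF16: the format keeps the sign bit and 8-bit exponent of a 32-bit float but only 7 bits of mantissa, so it is effectively the top half of an FP32 value. The Python sketch below illustrates that layout by simple truncation. The function names are illustrative, this is not SiFive code, and real hardware typically rounds rather than truncates.

```python
import struct

def float32_to_bf16_bits(x: float) -> int:
    """Return the 16-bit BF16 pattern for x by truncating its FP32 encoding."""
    bits32 = struct.unpack("<I", struct.pack("<f", x))[0]
    return bits32 >> 16  # keep sign, 8 exponent bits, top 7 mantissa bits

def bf16_bits_to_float(bits16: int) -> float:
    """Expand a 16-bit BF16 pattern back to a Python float."""
    return struct.unpack("<f", struct.pack("<I", (bits16 & 0xFFFF) << 16))[0]

for value in (1.0, 3.14159):
    bits = float32_to_bf16_bits(value)
    # The round trip shows how much precision the shorter mantissa keeps.
    print(f"{value} -> 0x{bits:04x} -> {bf16_bits_to_float(bits)}")
```

The trade-off is visible in the round trip: BF16 covers the same numeric range as FP32 with far fewer significant digits, which is generally acceptable for neural network weights and activations.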

SiFive’s 2nd Generation Intelligence Family Offers Expansive Market Coverage

The X160 Gen 2 targets IoT and edge compute, with a particularly small footprint designed for energy efficiency. Moving up the stack, the X180 Gen 2 brings additional horsepower for edge inference, while also offering integration options for data center environments. At the higher end, the X280 Gen 2 targets smart home and wearable devices, the X390 Gen 2 targets industrial edge and mobile applications, and the XM Gen 2 delivers wide vector and matrix compute capabilities, tuned for large language models (LLMs) and other more intensive applications.

One of the SiFive 2nd Generation Intelligence Family’s defining traits is its vector-enabled RISC-V architecture. Unlike scalar designs, these cores process multiple data items in parallel with a single instruction, which reduces instruction overhead and power consumption. They are particularly effective for AI models that use smaller data types.
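A rough way to see why smaller data types help: in the RISC-V vector extension, the maximum number of elements one vector instruction can process is LMUL × VLEN / SEW, where VLEN is the vector register width and SEW is the element width. The Python sketch below simply applies that formula. The 512-bit VLEN and the 1,024-element workload are assumptions chosen for illustration, not figures from SiFive’s announcement.

```python
import math

def vector_instructions_needed(n_elements: int, vlen_bits: int,
                               sew_bits: int, lmul: int = 1) -> int:
    """Instructions needed for one elementwise operation over n_elements."""
    vlmax = lmul * vlen_bits // sew_bits  # elements handled per instruction
    return math.ceil(n_elements / vlmax)

N = 1024     # assumed workload size
VLEN = 512   # assumed vector register width in bits

for sew in (32, 16, 8):
    count = vector_instructions_needed(N, VLEN, sew)
    # A scalar core would issue one operation per element, i.e. N of them.
    print(f"{sew}-bit elements: {count} vector instructions vs {N} scalar")
```

Under these assumptions, halving the element width halves the instruction count for the same amount of data, which is the effect the paragraph above describes.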

New Interfaces Facilitate SiFive Accelerator Control Units

In addition to updated cores with new capabilities, SiFive is also introducing new or updated interfaces with the 2nd Generation Intelligence Family to enable more advanced integration with AI and other accelerators. The interfaces are dubbed SSCI (SiFive Scalar Coprocessor Interface) and VCIX (Vector Coprocessor Interface). They’re designed to give all of SiFive’s Intelligence cores the ability to act as Accelerator Control Units, or ACUs, that manage custom AI accelerators with low latency. The SiFive Scalar Coprocessor Interface can be used to drive accelerators via RISC-V custom instructions, and it provides direct access to CPU registers and a flexible range of instruction opcodes. The Vector Coprocessor Interface provides high-bandwidth access to the processor’s vector registers using common vector-type instruction formats.
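To give a sense of what a RISC-V custom instruction looks like at the bit level, the Python sketch below packs a standard R-type instruction into the base ISA’s reserved custom-0 opcode space. This is generic RISC-V encoding, not SSCI itself: the announcement does not say which opcodes or fields SSCI uses, and the funct3/funct7 values here are hypothetical placeholders.

```python
CUSTOM_0 = 0b0001011  # custom-0 major opcode reserved by the RISC-V base ISA

def encode_r_type(funct7: int, rs2: int, rs1: int,
                  funct3: int, rd: int, opcode: int = CUSTOM_0) -> int:
    """Pack the R-type fields into a 32-bit instruction word."""
    assert 0 <= funct7 < 128 and 0 <= funct3 < 8
    assert all(0 <= reg < 32 for reg in (rs2, rs1, rd))
    return (funct7 << 25) | (rs2 << 20) | (rs1 << 15) \
        | (funct3 << 12) | (rd << 7) | opcode

# Hypothetical accelerator command: operands in x1 and x2, result in x3.
word = encode_r_type(funct7=0, rs2=2, rs1=1, funct3=0, rd=3)
print(f"0x{word:08x}")
```

Because the source and destination fields name ordinary CPU registers, an instruction of this shape can hand register contents to an attached unit and receive a result without going through memory, which is the kind of tight coupling the article attributes to SSCI.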

SiFive claims substantial performance gains for its new products. In MLPerf Tiny benchmarks, the X160 Gen 2 reportedly delivered 2x the performance of competing solutions with a similar small footprint, across workloads like keyword spotting, image classification, and anomaly detection.

Tasks like these are critical in markets such as wearables, smart home devices, and industrial edge systems, where compute resources are constrained but AI capability is increasingly essential. At the other end of the spectrum, the XM Gen 2 scales to thousands of TOPS, making it suitable for data-center-class generative AI workloads. And that’s really the key differentiator of SiFive’s technology: its scalability and customization options mean the processors can be tuned and tailored for a multitude of applications and workloads.

SiFive has also done considerable work on its software stack. The company’s framework integrates with popular AI runtimes and libraries such as TensorFlow Lite, ONNX Runtime, and llama.cpp, while offering its own compiler extensions and kernel libraries.

The timing of this announcement is also notable. Research cited by SiFive suggests that AI is accelerating RISC-V adoption, with shipments of RISC-V AI processors forecast to climb rapidly over the next several years. Deloitte data points to a 20% increase in AI workloads across all computing domains, with edge workloads projected to rise nearly 80%.

Low Power SiFive Scalar And Vector Compute For A Wide Array Of Applications

While the open RISC-V architecture eliminates licensing costs and enables customization, SiFive’s challenge lies in competing with entrenched incumbents. Companies like Arm, Intel, and NVIDIA already offer AI-optimized processor IP and accelerators with robust software ecosystems. SiFive’s approach, however, which pairs efficient scalar/vector compute with flexible accelerator control interfaces, is a unique proposition. By reducing reliance on proprietary interconnects or middleware, the company positions its IP as both cost-effective and adaptable.

If SiFive can demonstrate that its 2nd Generation Intelligence Family reduces AI development friction while hitting performance and efficiency targets, it could secure a stronger position in edge markets and carve out a role in data center AI deployments as well. That said, success will hinge on execution, particularly in aligning with software frameworks and proving scalability in production silicon, which is expected in Q2 2026.

SiFive will discuss these new cores at the AI Infra Summit in Santa Clara, California, taking place Sept. 9-11 (booth #908). 2nd Generation Intelligence Family cores are already being used by multiple top-tier chipmakers, and all five of the new products are available for licensing immediately.