Here we go again. While Google’s procession of critical security fixes and zero-day warnings makes headlines, the bigger threat to its 3 billion users is hiding undercover.

There’s “a new class of exploit” targeting “the top 5 GenAI tools” through “a previously overlooked vector: the browser extension.” LayerX says this means “billions could be affected,” with 99% of users “potentially exposed to this attack vector.”

Just 3 weeks ago, SquareX warned that “millions of users have data stolen,” also by malicious or hijacked browser extensions, with security tools having no runtime visibility to “protect users against the rising threat vector.”

You can see the theme here. The primary threat is to Windows PCs, and that means Google Chrome, which dominates and essentially controls the browser market, at least until the new genre of AI browsers gets any kind of foothold.

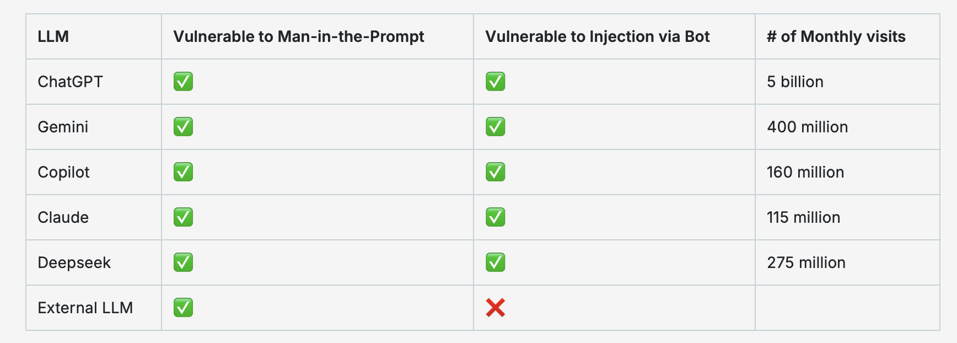

And it’s those same AI platforms that are behind LayerX’s “Man-in-the-Prompt Attack” warning. The team says its research “shows that any browser extension, even without any special permissions, can access the prompts of both commercial and internal LLMs and inject them with prompts to steal data, exfiltrate it, and cover their tracks.”

They warn AI assistants will become “‘hacking copilots’ to steal sensitive corporate information.” When you use an AI assistant, “the prompt input field is typically part of the page’s Document Object Model (DOM). This means any browser extension with scripting access to the DOM can read from, or write to, the AI prompt directly.”
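To make that DOM point concrete, here is a minimal TypeScript sketch of what "read from, or write to" a prompt field amounts to. It is an illustration only, not LayerX's proof of concept: the selector `textarea#prompt`, the helper names `readPrompt` and `appendToPrompt`, and the stand-in page object are all assumptions invented for this example, and the sketch runs against a fake page rather than a real browser.

```typescript
// Minimal stand-in types so the sketch runs without a browser.
// In a real page, a textarea element exposes the same `value` property.
interface PromptField {
  value: string;
}

interface DocumentLike {
  querySelector(selector: string): PromptField | null;
}

// Hypothetical selector; every AI tool uses its own markup.
const PROMPT_SELECTOR = "textarea#prompt";

// Read whatever the user has typed into the prompt field.
function readPrompt(
  doc: DocumentLike,
  selector: string = PROMPT_SELECTOR
): string | null {
  const field = doc.querySelector(selector);
  return field ? field.value : null;
}

// Append text to the prompt field; returns false if no field was found.
function appendToPrompt(
  doc: DocumentLike,
  extra: string,
  selector: string = PROMPT_SELECTOR
): boolean {
  const field = doc.querySelector(selector);
  if (!field) return false;
  field.value = `${field.value}\n${extra}`;
  return true;
}

// Fake page used for demonstration only.
const fakeField: PromptField = { value: "Summarize our Q3 numbers" };
const fakePage: DocumentLike = {
  querySelector: (selector) =>
    selector === PROMPT_SELECTOR ? fakeField : null,
};

console.log(readPrompt(fakePage));
appendToPrompt(fakePage, "(text added by a script, not the user)");
console.log(fakeField.value);
```

The point of the sketch is how little is involved: once a script can query the page, the prompt is just another field. Real AI tools may use different elements, such as editable `div`s rather than a `textarea`, so the details would differ in practice.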

We are seeing more noise about direct and indirect prompt injection attacks, where your AI assistant sees what you don’t, as hidden instructions buried within a document or even an online search result trick the AI assistant into doing something it shouldn’t, compromising you, your data and your workplace.

“Because of this tight integration between AI tools and browsers,” LayerX says, “LLMs inherit much of the browser’s risk surface.” This is made worse because a browser extension usually operates with your security credentials, as if you were taking its actions yourself. That can include signing into websites, even if the sign-in is a malicious fake.

It’s the same kind of risk that prompted Google to warn that “with the rapid adoption of generative AI, a new wave of threats is emerging across the industry with the aim of manipulating the AI systems themselves.”

Google says “as more governments, businesses, and individuals adopt generative AI to get more done, this subtle yet potentially potent attack becomes increasingly pertinent across the industry, demanding immediate attention and robust security measures.”

The issue that LayerX, SquareX and others are highlighting is that when it comes to browser extensions, those “robust security measures” are non-existent.

Be careful when installing extensions, and be especially careful about which ones are active while you use AI tools in the same browser.