Several NAND flash manufacturers discussed higher-bandwidth flash technologies at FMS 2025 in Santa Clara, CA. The most significant announcement came from Sandisk and SK hynix, who said they would work together to create standards for high bandwidth flash in an HBM package. That effort could enable the next generation of AI training and inference at lower cost and with reduced energy consumption.

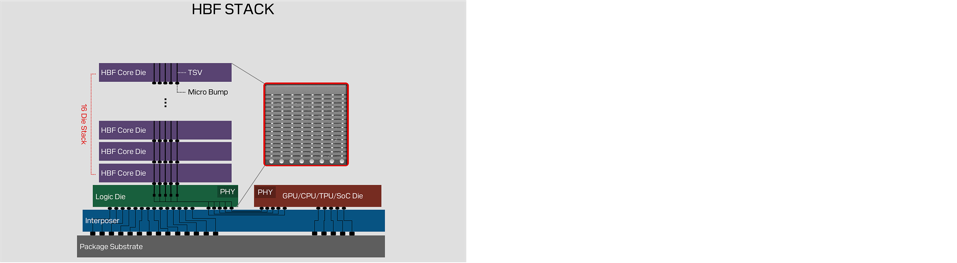

Sandisk announced that it has signed a Memorandum of Understanding with SK hynix to drive standardization of High Bandwidth Flash (HBF) memory technology. HBF is a NAND flash-based solution contained in an HBM package. HBM is widely used to meet the immediate memory needs of GPUs for AI training and inference, and it has traditionally been built from dynamic random-access memory (DRAM), which provides very fast access to data. An image of a flash-based HBF module architecture is shown below.

HBF is a new technology designed to deliver breakthrough memory capacity and performance for the next generation of AI inference. It would supplement traditional DRAM-based HBM by offering a lower cost per unit of capacity. Through this collaboration, Sandisk and SK hynix aim to standardize the specification, define technology requirements, and explore the creation of a technology ecosystem for High Bandwidth Flash.

As AI models grow larger and more complex, inference workloads demand both massive bandwidth and significantly greater memory capacity. Designed for AI inference workloads in large data centers, small enterprises and edge applications, HBF is intended to offer bandwidth comparable to that of High Bandwidth Memory (HBM) while delivering up to 8 to 16 times the capacity of HBM at a similar cost.
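As a rough illustration of what that claim implies for cost per unit of capacity, assume (this is an assumption, not a figure from Sandisk) that "similar cost" means one HBF stack and one HBM stack carry the same total price, and let $k$ be the capacity multiple:

$$
\frac{\text{cost}_{\text{HBF}} / \text{capacity}_{\text{HBF}}}{\text{cost}_{\text{HBM}} / \text{capacity}_{\text{HBM}}}
= \frac{\text{cost}_{\text{HBF}}}{\text{cost}_{\text{HBM}}} \cdot \frac{\text{capacity}_{\text{HBM}}}{\text{capacity}_{\text{HBF}}}
\approx 1 \cdot \frac{1}{k}, \qquad k \in [8, 16]
$$

Under that assumption, HBF would cost roughly $1/16 = 6.25\%$ to $1/8 = 12.5\%$ as much as HBM per unit of capacity.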

Beyond offering more capacity in an HBM-like package, NAND flash is non-volatile, while DRAM is volatile. DRAM must regularly refresh the data it stores, and those refreshes consume energy, whereas NAND flash retains its data without power. Substituting some non-volatile memory for what would otherwise be volatile memory in GPUs and AI systems may therefore reduce the energy requirements of AI applications in data centers.

This could make AI applications more feasible in energy-constrained data centers, helping to democratize AI development. It could also help reduce the projected power requirements of hyperscale and other data centers that support AI development.

Sandisk also announced the formation of a Technical Advisory Board to guide the development and strategy of its HBF memory technology. The board, made up of industry experts and senior technical leaders from both inside and outside Sandisk, will provide strategic guidance, technical insight and market perspective, and will help shape a standards-driven ecosystem.

Enabled by Sandisk’s BiCS technology and proprietary CBA wafer bonding, and developed over the past year with input from leading AI industry players, Sandisk’s HBF technology received the “Best of Show, Most Innovative Technology” award at FMS: the Future of Memory and Storage 2025. Sandisk aims to deliver first samples of its HBF memory in the second half of calendar 2026 and expects samples of the first AI-inference devices with HBF to be available in early 2027.

Sandisk and SK hynix announced that they will work together to create standards for high bandwidth NAND flash (HBF) modules that supplement DRAM-based HBM and help enable the next generation of AI.