As AI-accelerated workloads proliferate across edge environments—from smart cities to retail and industrial surveillance—choosing the right inference accelerator has become a mission-critical decision for many businesses. In a new competitive benchmark study conducted by our analysts at HotTech Vision and Analysis, we put several of today’s leading edge AI acceleration platforms to the test in a demanding, real-world scenario: multi-stream computer vision inference processing of high-definition video feeds.

The study evaluated AI accelerators from NVIDIA, Hailo, and Axelera AI across seven object detection models, including SSD MobileNet and multiple versions of YOLO, to simulate a surveillance system with 14 concurrent 1080p video streams. The goal was to assess the real-time throughput, energy efficiency, deployment complexity and detection accuracy of these top accelerators, all of which feed into a product’s total cost of ownership (TCO).

Measuring AI Accelerator Performance In Machine Vision Applications

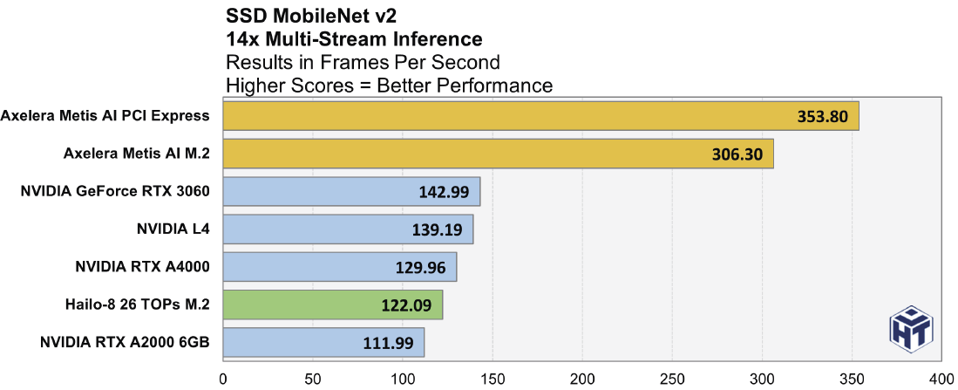

All of the accelerators tested provided significant gains over CPU-only inference, some running up to 30x faster, underscoring how vital dedicated hardware accelerators have become for AI inference. Among the tested devices, the PCIe and M.2 accelerators from Axelera delivered the strongest throughput on every model tested, with the advantage most pronounced on the heavier YOLOv5m and YOLOv8l workloads. Notably, the Axelera PCIe card maintained performance levels where several other accelerators tapered off.

That said, NVIDIA’s higher-end RTX A4000 GPU remained competitive in certain tests, particularly with smaller models like YOLOv5s. Hailo’s M.2 module offered a compact, low-power alternative, though it trailed in raw throughput.

Overall, the report illustrates that inference performance can vary significantly depending on the AI model and hardware pairing, an important takeaway for integrators and developers designing systems for specific image detection workloads. It also shows how strongly Axelera’s Metis accelerators perform in this very common AI inference use case compared with major incumbents like NVIDIA.

Inferencing Power Efficiency Is Paramount And Axelera Leads

Power consumption is an equally important factor, especially in AI edge deployments, where thermal and mechanical constraints and operational costs can limit design flexibility. Using per-frame energy metrics, our research found that all accelerators delivered improved efficiency over CPUs, with several consuming less than one joule per frame of inference.
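To make the per-frame energy metric concrete, here is a minimal Python sketch of how such a figure can be derived, assuming it is computed as average power draw divided by sustained throughput. The function name and the sample numbers are illustrative assumptions, not figures or methodology taken from the report.

```python
def joules_per_frame(avg_power_watts: float, throughput_fps: float) -> float:
    """Energy spent per inferenced frame.

    One watt is one joule per second, so dividing average power (J/s)
    by throughput (frames/s) yields joules per frame.
    """
    if throughput_fps <= 0:
        raise ValueError("throughput_fps must be positive")
    return avg_power_watts / throughput_fps


# Hypothetical readings, for illustration only (not measured data):
# an accelerator averaging 20 W while sustaining 400 frames per second.
example_energy = joules_per_frame(avg_power_watts=20.0, throughput_fps=400.0)
print(f"{example_energy:.3f} J/frame")
```

Under this definition, a device can draw more power than a rival and still be more efficient, provided its throughput rises faster than its power draw.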

Here, Axelera’s solutions outperformed competitors in every test, delivering the lowest energy use per frame on all AI models tested. NVIDIA’s GPUs closed the gap somewhat on the YOLO models, while Hailo maintained respectable efficiency, particularly for its compact form factor.

The report highlights that AI performance gains do not always have to come at the cost of power efficiency, depending on architecture, models and workload optimizations employed.

The Developer Experience Matters And Axelera Is Well-Tooled

Beyond performance and efficiency, our report also looked at the developer setup process—an often under-appreciated element of total deployment cost. Here, platform complexity diverged more sharply.

Axelera’s SDK provided a relatively seamless experience with out-of-the-box support for multi-stream inference and minimal manual setup. NVIDIA’s solution required more hands-on configuration due to model compatibility limitations with DeepStream. Hailo’s SDK was Docker-based but required model-specific pre-processing and compilation.

The takeaway: development friction can vary widely between platforms and should factor into deployment timelines, especially for teams with limited AI or embedded systems expertise. Here, Axelera’s solutions once again delivered a simplicity of setup and out-of-box experience that the other solutions we tested could not match.

Model Accuracy and Real-World Usability

Our study also analyzed object detection accuracy using real-world video footage. While all platforms produced usable results, differences in detection confidence and object recognition emerged. Axelera’s accelerators tended to detect more objects and draw more bounding boxes across test scenes, likely a result of model tuning and post-processing defaults that seemed more refined.

Still, our report notes that all tested platforms could be further optimized with custom-trained models and threshold adjustments. As such, out-of-the-box accuracy may matter most for proof-of-concept development, whereas other, more complex deployments might rely on domain-specific model refinement and tuning.
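As a rough illustration of why threshold adjustments matter, the Python sketch below shows how a confidence threshold in post-processing changes the number of bounding boxes that get drawn. The `Detection` type, the `filter_by_confidence` helper and the sample scores are hypothetical and do not come from any vendor SDK or from the report's data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    label: str
    confidence: float  # model score in the range [0, 1]


def filter_by_confidence(detections: list[Detection], threshold: float) -> list[Detection]:
    """Keep only detections whose score meets or exceeds the threshold."""
    return [d for d in detections if d.confidence >= threshold]


# Hypothetical raw model output for a single frame (illustrative values only).
SAMPLE_DETECTIONS = [
    Detection("person", 0.91),
    Detection("car", 0.62),
    Detection("bicycle", 0.34),
    Detection("person", 0.27),
]

# The same raw output produces a different number of boxes depending on
# the default threshold a platform ships with.
for threshold in (0.25, 0.50):
    kept = filter_by_confidence(SAMPLE_DETECTIONS, threshold)
    print(f"threshold={threshold:.2f}: {len(kept)} boxes drawn")
```

A lower default threshold draws more boxes from identical model output, which is one reason out-of-the-box comparisons of detection counts should be read alongside each platform's post-processing settings.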

Market Implications: Specialization Vs Generalization

Our AI research and performance validation report underscores the growing segmentation in AI inference hardware. On one end, general-purpose GPUs like those from NVIDIA offer high flexibility and deep software ecosystem support, which is valuable in heterogeneous environments. On the other, dedicated inference engines like those from Axelera provide compelling efficiency and performance advantages for more focused use cases.

As edge AI adoption grows, particularly in vision-centric applications, demand for energy-efficient, real-time inference is accelerating. Markets such as logistics, retail analytics, transportation, robotics and security are driving that need, with form factor, power efficiency, and ease of integration playing a greater role than raw compute throughput alone.

While this round of testing (detailed in our full research paper) favored Axelera on several fronts, including performance, efficiency, and setup simplicity, this is not a one-size-fits-all outcome. Platform selection will depend heavily on use case, model requirements, deployment constraints, and available developer resources.

What the data does make clear is that edge AI inference is no longer the exclusive domain of GPU acceleration. Domain-specific accelerators are proving they can compete, and in some cases lead, in the metrics that matter most for real-world deployments.