The AI world continues to evolve rapidly, especially since the introduction of DeepSeek and its followers. Many have concluded that enterprises don’t really need the large, expensive AI models touted by OpenAI, Meta, and Google, and are focusing instead on smaller models, such as DeepSeek V2-Lite with 2.4B active parameters, or Llama 4 Scout and Maverick with 17B active parameters, which can provide decent accuracy at a lower cost. It turns out that this is not the case for coders, or more accurately, for the models that can and will replace many coders. Nor does the smaller-is-better mantra apply to reasoning or agentic AI, the next big thing.

AI code generators require large models with a wide context window, capable of accommodating approximately 100,000 lines of code. Mixture-of-experts (MoE) models that support agentic and reasoning AI are also large. But these massive models are typically quite expensive, costing around $10 to $15 per million output tokens on modern GPUs. Therein lies an opportunity for novel AI architectures to encroach on GPUs’ territory.
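To put that price range in perspective, here is a minimal back-of-the-envelope sketch. The monthly workload of 50 million output tokens is a hypothetical figure chosen for illustration, and the function name is my own; only the $10 and $15 per-million prices come from the range quoted above.

```python
def output_cost_usd(output_tokens: int, price_per_million_usd: float) -> float:
    """Cost in USD of generating `output_tokens` at a per-million-token price."""
    return output_tokens / 1_000_000 * price_per_million_usd


# Hypothetical workload (an assumption, not a measured figure):
# a coding agent that emits 50 million output tokens per month.
monthly_tokens = 50_000_000

low = output_cost_usd(monthly_tokens, 10.0)   # low end of the quoted range
high = output_cost_usd(monthly_tokens, 15.0)  # high end of the quoted range
print(f"${low:,.0f} to ${high:,.0f} per month")
```

Because the cost scales linearly with tokens generated, any cut in the per-million price flows straight through to the monthly bill.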

Cerebras Systems Launches Big AI with Qwen3-235B

Cerebras Systems (a client of Cambrian-AI Research) has announced support for the large Qwen3-235B, supporting 131K context length (about 200–300 pages of text), four times what was previously available. At the RAISE Summit in Paris, Cerebras touted Alibaba’s Qwen3-235B, which uses a highly efficient mixture-of-experts architecture to deliver exceptional compute efficiency. But the real news is that Cerebras can run the model at only $0.60 per million input tokens and $1.20 per million output tokens, less than one-tenth the cost of comparable closed-source models. While many consider the Cerebras wafer-scale engine expensive, this data turns that perception on its head.

One question I frequently get is, if Cerebras is so fast, why don’t they have more customers? One reason is that they have not supported large context windows and larger models. Those seeking to develop code, for example, do not want to break down the problem into smaller fragments to fit, say, a 32K-token context. Now, that barrier to sales has evaporated.

“We’re seeing huge demand from developers for frontier models with long context, especially for code generation,” said Cerebras Systems CEO and Founder Andrew Feldman. “Qwen3-235B on Cerebras is our first model that stands toe-to-toe with frontier models like Claude 4 and DeepSeek R1. And with full 131K context, developers can now use Cerebras on production-grade coding applications and get answers back in less than a second instead of waiting for minutes on GPUs.”

Cerebras has quadrupled its context length support from 32K to 131K tokens—the maximum supported by Qwen3-235B. This expansion directly impacts the model’s ability to reason over large codebases and complex documentation. While 32K context is sufficient for simple code generation use cases, 131K context enables the model to process dozens of files and tens of thousands of lines of code simultaneously, allowing for production-grade application development.
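A rough sketch of why the jump matters for code follows. It assumes an average of about 10 tokens per line of code and reserves 8,000 tokens for the model's answer; both numbers are illustrative assumptions, and real ratios vary by language and tokenizer. I also take "32K" and "131K" to mean 32,768 and 131,072 tokens. The helper name is hypothetical.

```python
def fits_in_context(lines_of_code: int,
                    context_tokens: int,
                    tokens_per_line: float = 10.0,     # assumed average, varies by language
                    reserved_for_output: int = 8_000   # assumed room left for the answer
                    ) -> bool:
    """Estimate whether a codebase plus the model's answer fits in the context window."""
    estimated_input = lines_of_code * tokens_per_line
    return estimated_input + reserved_for_output <= context_tokens


CONTEXT_32K = 32_768     # assumed exact size of the "32K" window
CONTEXT_131K = 131_072   # assumed exact size of the "131K" window

for lines in (2_000, 10_000):
    print(lines,
          fits_in_context(lines, CONTEXT_32K),
          fits_in_context(lines, CONTEXT_131K))
```

Under these assumptions, a 2,000-line project fits in either window, while a 10,000-line project fits only in the larger one. That is the difference between pasting in a few files and handing the model a whole service.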

Qwen3-235B excels at tasks requiring deep logical reasoning, advanced mathematics, and code generation, thanks to its ability to switch between “thinking mode” (for high-complexity tasks) and “non-thinking mode” (for efficient, general-purpose dialogue). The 131K context length allows the model to ingest and reason over large codebases (tens of thousands of lines), supporting tasks such as code refactoring, documentation, and bug detection.

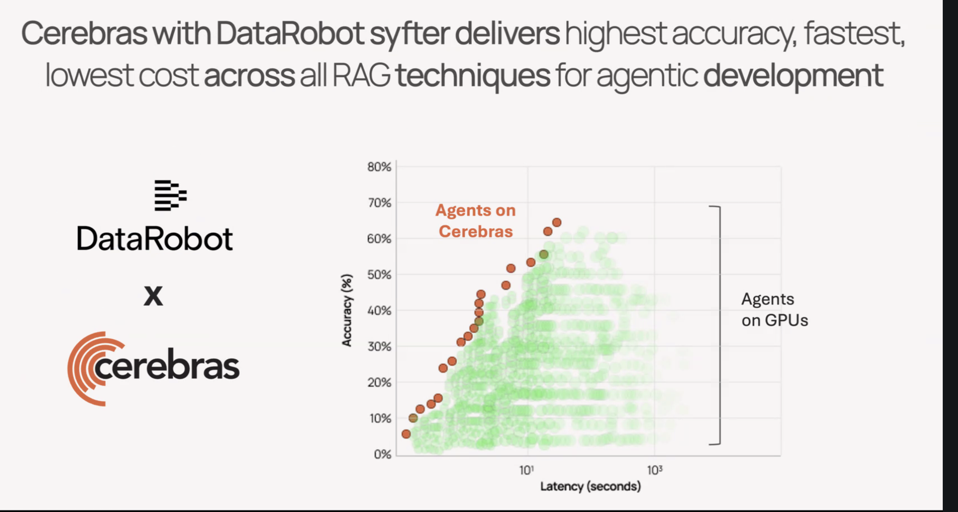

Cerebras also announced the further expansion of its ecosystem, with support from Amazon Web Services (AWS), as well as DataRobot, Docker, Cline, and Notion. The addition of AWS is huge.

Where is this heading?

Big AI has constantly been downsized and optimized, with order-of-magnitude gains in performance and comparable reductions in model size and price. This trend will undoubtedly continue, but will be constantly offset by increases in capabilities, accuracy, intelligence, and entirely new features across modalities. So, if you want last year’s AI, you’re in great shape, as it continues to get cheaper.

But if you want the latest features and functions, you will require the largest models and the longest input context length.

It’s the Yin and Yang of AI.