At Computex 2025 this week in Taiwan, Nvidia unveiled NVLink Fusion, a move that underscores its long-term effort to shape AI infrastructure. More than just a new interconnect technology, NVLink Fusion marks a strategic shift in Nvidia’s approach to the modern AI data center, positioning the company not only as a supplier of high-performance accelerator and infrastructure solutions, but also as a foundational platform for a modular, highly optimized AI-driven computing future.

NVLink Fusion represents a new direction in how Nvidia approaches the cloud data center and hyperscaler markets, empowering partners like Qualcomm, Marvell, Fujitsu and MediaTek to design purpose-built silicon that can interface directly with Nvidia’s Blackwell GPU architecture via the company’s now open and extensible NVLink architecture.

A Shift Toward Heterogeneous AI Factories

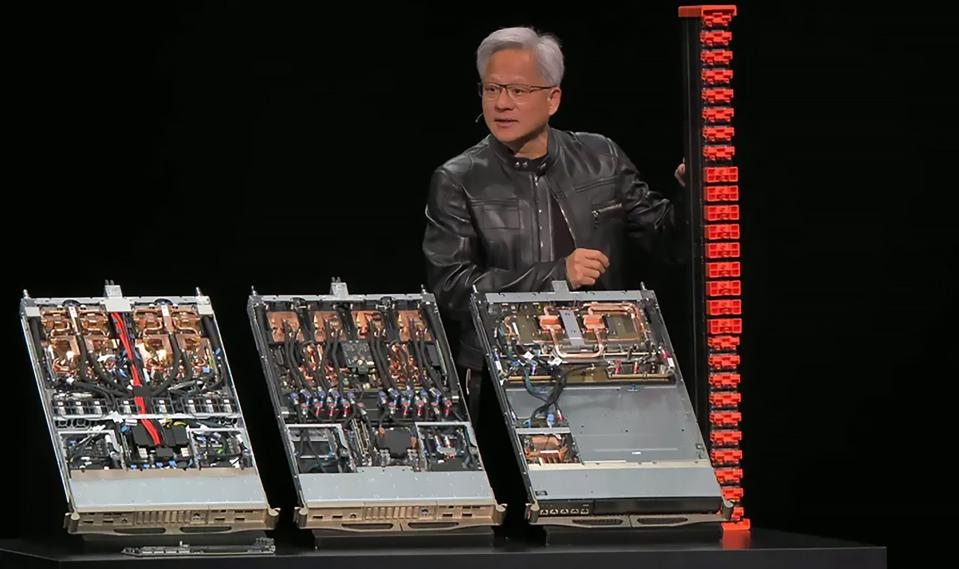

As Jensen Huang, CEO of Nvidia, put it during the reveal: “a tectonic shift is underway.” This is not an overstatement. For decades, data centers have been standardized around general-purpose CPUs and predictable compute models. However, the rise of trillion-parameter AI models and real-time agentic inference has pushed traditional architectures to the brink. That said, high-performance GPUs like Nvidia’s Blackwell architecture are only part of the solution. High-speed interconnects that allow GPUs, CPUs and data plane processors to communicate in unison are essential, and that’s where Nvidia’s masterful acquisition of high-speed networking and interconnect innovator Mellanox years ago has paid off in spades.

A derivative of Nvidia’s proprietary high-bandwidth NVLink interconnect technology, NVLink Fusion addresses this head-on by allowing third-party silicon, like custom CPUs and accelerators, to be tightly coupled with Nvidia GPUs at the board and rack level. NVLink excels at high-speed, intra-node GPU communication, while Nvidia’s Mellanox InfiniBand and Ethernet technologies enable efficient inter-node communication across cabinets, clusters and data centers.

Notably, Nvidia’s NVLink platform enables up to 1.8 TB/s of bandwidth per GPU, over 14x the bandwidth of PCIe Gen5, and that’s what makes the technology so interesting in partnership with other silicon solution providers.
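For readers who want to sanity-check the "over 14x" figure, here is a rough back-of-the-envelope sketch. The 1.8 TB/s number comes from the text above. The PCIe side is my assumption: a x16 Gen5 link at 32 GT/s per lane, counted in both directions and ignoring encoding and protocol overhead. The function names are purely illustrative.

```python
# Back-of-the-envelope check of the "over 14x PCIe Gen5" comparison.
# The NVLink figure is the one quoted in the article; the PCIe figures are
# assumptions (x16 link, raw signaling rate, both directions, no overhead).

NVLINK_GB_PER_S = 1800           # 1.8 TB/s per GPU, as quoted above
PCIE_GEN5_GT_PER_S_PER_LANE = 32 # assumed raw rate per lane, per direction
PCIE_LANES = 16                  # assumed x16 slot


def pcie_gen5_x16_bidirectional_gb_per_s() -> float:
    """Raw bidirectional bandwidth of an assumed x16 PCIe Gen5 link in GB/s."""
    per_direction_gbit = PCIE_GEN5_GT_PER_S_PER_LANE * PCIE_LANES  # 512 Gb/s
    per_direction_gbyte = per_direction_gbit / 8                   # 64 GB/s
    return 2 * per_direction_gbyte                                 # 128 GB/s


def nvlink_to_pcie_ratio() -> float:
    """How many times larger the quoted NVLink figure is than the PCIe one."""
    return NVLINK_GB_PER_S / pcie_gen5_x16_bidirectional_gb_per_s()


if __name__ == "__main__":
    print(f"PCIe Gen5 x16 (assumed): {pcie_gen5_x16_bidirectional_gb_per_s():.0f} GB/s")
    print(f"NVLink / PCIe ratio:     {nvlink_to_pcie_ratio():.2f}x")
```

Under those assumptions the ratio comes out just above 14, which lines up with the claim. Real-world PCIe throughput is somewhat lower once overhead is counted, so the practical gap would be a little wider.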

Who’s Building On Nvidia NVLink Fusion And Why It Matters

Qualcomm is the most significant surprise in the mix, in my view. Best known for its dominance in mobile and recent entry into client computing, Qualcomm will now extend its reach into the data center by developing custom CPUs designed to link directly into Nvidia’s AI stack via NVLink Fusion. According to Qualcomm CEO Cristiano Amon, this alignment enables “high-performance, energy-efficient computing” tailored for data center AI deployments. With a track record of power-optimized silicon and a growing stake in AI inference and revenue diversification, Qualcomm’s entry signals how NVLink Fusion is opening the door for new players to compete in data center infrastructure.

MediaTek, already in close collaboration with Nvidia on automotive platforms, is expanding its reach into hyperscale AI infrastructure. With advanced ASIC design services and proven SoC architecture and interconnect experience, MediaTek will strive to bring new, efficient compute options to AI factories with the help of NVLink.

Synopsys and Cadence, meanwhile, are moving to enable NVLink Fusion support deep in the chip design process. Their interface IP, chiplet and silicon design tools make it easier for partners to build compliant, production-ready chips that will integrate easily into an Nvidia NVLink-enabled system.

Together, these players, as well as other big-name chip companies like Marvell and Fujitsu, which also announced support, represent a new wave of modular, bespoke AI infrastructure: configurable, scalable, and optimized for task-specific performance.

Strategic Implications And Market Dynamics

From a business perspective, NVLink Fusion does two big things for Nvidia. It helps solidify its AI ecosystem dominance while simultaneously embracing a more open model. This is a calculated play. Rather than walling off its chip and platform architecture, Nvidia is inviting the industry in—with the caveat that the core still runs through its GPU platform and interconnect fabric.

This “open but owned” model has proven successful many times in the semiconductor industry over the years: maintain control of a standard, but let others innovate on top of it. With NVLink Fusion, Nvidia is doing just that in the data center, but with AI at the center of it all. The timing of this announcement is also strategic. As hyperscalers like AWS, Microsoft, and Google weigh their own in-house silicon strategies, Nvidia is offering a middle path: bring your chips, plug into our infrastructure, and still ride the wave of Nvidia GPU-driven performance.

On the ground, the potential market impact is significant, and it could accelerate adoption of Nvidia platforms across industries that have struggled with the cost and complexity of scaling.

Final Take-Away

NVLink Fusion may not be flashy on the surface; it’s just silicon plumbing after all. But underneath, it signals a fundamental rearchitecting of how AI infrastructure could be built. Over the years, Nvidia has evolved from a chip company into the backbone of AI-scale computing. And with NVLink Fusion, it’s inviting the rest of the industry to build alongside it.

If you’re watching where AI infrastructure is headed over the next few years, look closely at this Nvidia announcement. It’s less about speeds and feeds, and more about who might build what, and on whose terms.