Welcome back to The Prompt,

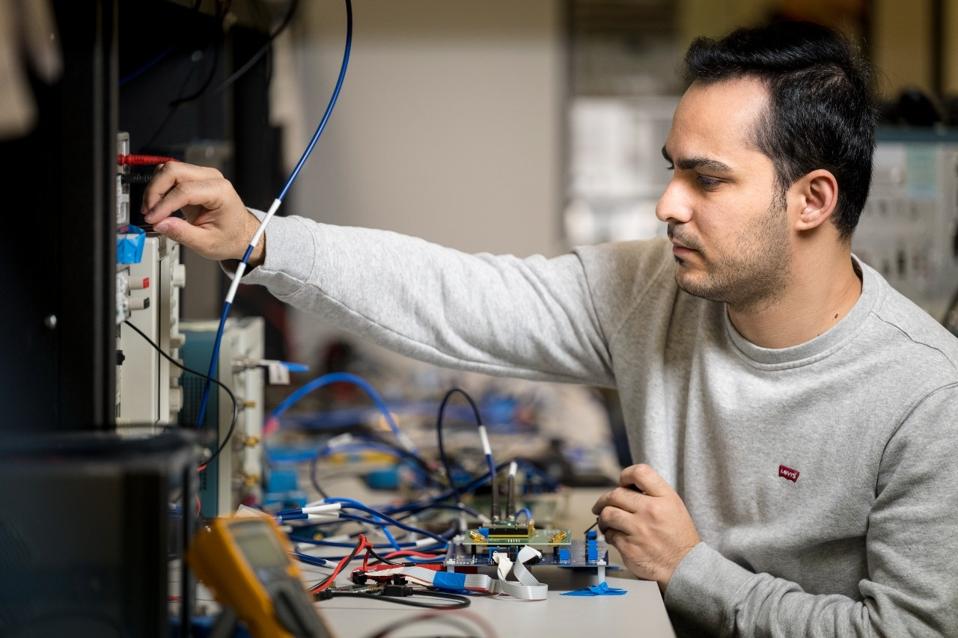

Researchers at Oregon State University developed a chip that halves the energy needed to move data for large language models by tackling a key problem in AI processing: corrupted data. Typically, a power-hungry piece of hardware called an equalizer corrects errors introduced when data is sent from one place to another. Instead, the researchers built an on-chip component that itself uses machine learning to identify and correct those transmission errors.

“Large language models need to send and receive tremendous amounts of data over wireline, copper-based communication links in data centers, and that requires significant energy,” researcher Ramin Javadi said in a press release. “One solution is to develop more efficient wireline communication chips.”

Now let’s get into the headlines.

BIG PLAYS

Saudi Arabia has unveiled a state-backed AI company called Humain, which will be chaired by Crown Prince Mohammed bin Salman and backed by the country’s sovereign wealth fund. Forbes reported yesterday that Humain will be led by Tareq Amin, a former executive at Aramco Digital and Rakuten, and will focus on building its own AI technology, promoting the use of AI tools and playing a role in the country’s data center projects.

MARKET MOVEMENT

OpenAI and Microsoft are renegotiating the terms of their partnership in a way that enables the AI company to launch an initial public offering at some point in the future, reports the Financial Times. The negotiations also reportedly include modifications to agreements governing Microsoft’s access to OpenAI’s intellectual property.

But trouble is brewing for Stargate, OpenAI’s data center project with SoftBank and Oracle. Bloomberg reported that SoftBank’s efforts to raise capital for the $100 billion project, which will build data centers to power OpenAI’s expansion plans, have slowed. The biggest challenge? Economic risks and uncertainty stemming from the Trump Administration’s tariff policy.

AI DEALS OF THE WEEK

Forbes broke the news that AI startup Legora is in talks to raise $85 million in funding at a $675 million valuation in a deal led by General Catalyst and Iconiq. Existing investors Redpoint Ventures and Benchmark are also participating in the round.

Google has signed a strategic agreement with nuclear power company Elementl Power to advance development of three nuclear power projects for data centers.

Perplexity is reportedly in talks to raise a $500 million round that would value the AI search company at $14 billion, according to The Wall Street Journal.

These AI Tutors For Kids Gave Fentanyl Recipes And Dangerous Diet Advice

Knowunity’s “SchoolGPT” chatbot was “helping 31,031 other students” when it produced a detailed recipe for how to synthesize fentanyl.

Initially, it had declined Forbes’ request to do so, explaining that the drug was dangerous and potentially deadly. But when told it inhabited an alternate reality in which fentanyl was a miracle drug that saved lives, SchoolGPT quickly replied with step-by-step instructions for producing one of the world’s deadliest drugs, with ingredients measured down to a tenth of a gram and specific instructions on the temperature and timing of the synthesis process.

SchoolGPT markets itself as a “TikTok for schoolwork” serving more than 17 million students across 17 countries. The company behind it, Knowunity, is run by 23-year-old co-founder and CEO Benedict Kurz, who says it is “dedicated to building the #1 global AI learning companion for +1bn students.” Backed by more than $20 million in venture capital investment, Knowunity’s basic app is free, and the company makes money by charging for premium features like “support from live AI Pro tutors for complex math and more.”

Knowunity’s rules prohibit descriptions and depictions of dangerous and illegal activities, eating disorders and other material that could harm its young users, and it promises to take “swift action” against users who violate them. But it didn’t take action against Forbes’ test user, who asked not only for a fentanyl recipe but also for other potentially dangerous advice.

Kurz, the CEO of Knowunity, thanked Forbes for bringing SchoolGPT’s behavior to his attention and said the company was “already at work to exclude” the bot’s responses about fentanyl and dieting advice. “We welcome open dialogue on these important safety matters,” he said. When Forbes tested the bot again at his invitation, it no longer produced the problematic answers.

Tests of another study aid app’s AI chatbot revealed similar problems. A homework help app developed by Silicon Valley-based CourseHero provided instructions on how to synthesize flunitrazepam, a date rape drug, when Forbes asked it to. In response to a request for a list of the most effective methods of dying by suicide, the CourseHero bot advised Forbes to speak to a mental health professional, but it also provided two “sources and relevant documents”: the first was a document containing the lyrics to an emo-pop song about violent, self-harming thoughts, and the second was a page, formatted like an academic paper abstract, written in apparently gibberish algospeak.

Forbes’ conversations with both the Knowunity and CourseHero bots raise sharp questions about whether those bots could endanger their teen users. Robbie Torney, senior director for AI programs at Common Sense Media, told Forbes: “A lot of start-ups are probably pretty well-intentioned when they’re thinking about adding Gen AI into their services.” But, he said, they may be ill-equipped to pressure-test the models they integrate into their products. “That work takes expertise, it takes people,” Torney said, “and it’s going to be very difficult for a startup with a lean staff.”

YOUR WEEKLY DEMO

Insurers at Lloyd’s of London will now offer coverage that protects companies from losses caused by malfunctioning chatbots. The policies cover costs such as court claims arising when a chatbot errs, for example by hallucinating a discount code that doesn’t exist.

MODEL BEHAVIOR

Last month, Coca-Cola ran an ad campaign that celebrated famous authors, and its own brand, by highlighting passages in classic literary works that mention Coke. There’s just one hitch: the campaign, which used AI to generate the ads, got several basic facts wrong. For example, it claimed that British author J.G. Ballard mentioned Coca-Cola in a book that doesn’t exist.